Mark Gurman, writing for Bloomberg:

Meta Platforms Inc. has poached Apple Inc.’s most prominent design executive in a major coup that underscores a push by the social networking giant into AI-equipped consumer devices.

The company is hiring Alan Dye, who has served as the head of Apple’s user interface design team since 2015, according to people with knowledge of the matter. Apple is replacing Dye with longtime designer Stephen Lemay, according to the people, who asked not to be identified because the personnel changes haven’t been announced.

I don’t regularly cover personnel moves here, but Alan Dye jumping over to Meta has been a big deal in the Apple news ecosystem. John Gruber, in a piece titled “Bad Dye Job” on his Daring Fireball blog, wrote a scathing takedown of Dye, excoriating his tenure at Apple and flogging him for going over to Meta, which is arguably Apple’s arch nemesis.

Putting Alan Dye in charge of user interface design was the one big mistake Jony Ive made as Apple’s Chief Design Officer. Dye had no background in user interface design — he came from a brand and print advertising background. Before joining Apple, he was design director for the fashion brand Kate Spade, and before that worked on branding for the ad agency Ogilvy. His promotion to lead Apple’s software interface design team under Ive happened in 2015, when Apple was launching Apple Watch, their closest foray into the world of fashion. It might have made some sense to bring someone from the fashion/brand world to lead software design for Apple Watch, but it sure didn’t seem to make sense for the rest of Apple’s platforms. And the decade of Dye’s HI leadership has proven it.

I usually appreciate Gruber’s writing and his take on things. He’s unafraid to tell it like it is and to be incredibly direct, which makes people both love him and fear him. But in paragraph after paragraph, Gruber just lays into Dye.

It’s rather extraordinary in today’s hyper-partisan world that there’s nearly universal agreement amongst actual practitioners of user-interface design that Alan Dye is a fraud who led the company deeply astray. It was a big problem inside the company too. I’m aware of dozens of designers who’ve left Apple, out of frustration over the company’s direction, to work at places like LoveFrom, OpenAI, and their secretive joint venture io. I’m not sure there are any interaction designers at io who aren’t ex-Apple, and if there are, it’s only a handful. From the stories I’m aware of, the theme is identical: these are designers driven to do great work, and under Alan Dye, “doing great work” was no longer the guiding principle at Apple. If reaching the most users is your goal, go work on design at Google, or Microsoft, or Meta. (Design, of course, isn’t even a thing at Amazon.) Designers choose to work at Apple to do the best work in the industry. That has stopped being true under Alan Dye. The most talented designers I know are the harshest critics of Dye’s body of work, and the direction in which it’s been heading.

Designers can be great at more than one thing and they can evolve. Being in design leadership does not mean that you need to be the best practitioner of all the disciplines, but you do need to have the taste, sensibilities, and judgement of a good designer, no matter how you started. I’m a case in point. I studied traditional graphic design in art school. But I’ve been in digital design for most of my career now, and product design for the last 10 years.

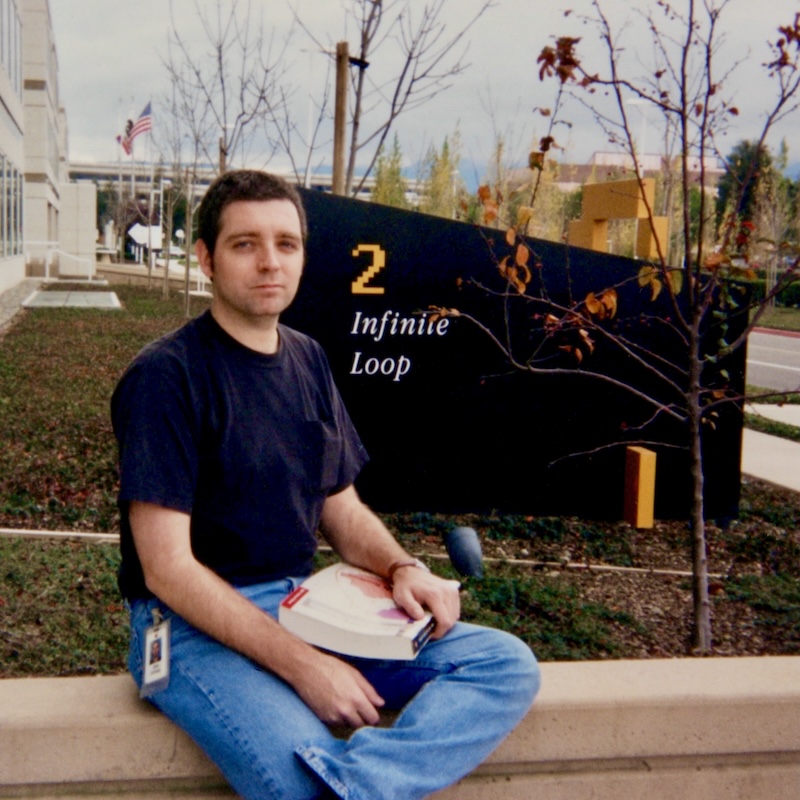

Has UI over at Apple been worse over the last 10 years? Maybe. I will need to analyze things a lot more carefully. But I vividly remember having debates with my fellow designers about Mac OS X UI choices like the pinstriping, brushed metal, and many, many inconsistencies when I was working in the Graphic Design Group in 2004. UI design has never been perfect in Cupertino.

Alan Dye isn’t a CEO and wasn’t even at the same exposure level as Jony Ive when he was still at Apple. I don’t know Dye, though we’re certainly in the same design circles—we have 20 shared connections on LinkedIn. But as far as I’m concerned, he’s a civilian because he kept a low profile, like all Apple employees.

The parasocial relationships we have with tech executives are weird. I guess it’s one thing if they have a large online presence like Instagram’s Adam Mosseri or 37signals’ David Heinemeier Hansson (aka DHH), but Alan Dye made only a couple of appearances in Apple keynotes and talked about Liquid Glass. So why is Gruber writing 2,500 words in this particular post, when it’s just one of five posts covering this story?

Anyway, I’m not a big fan of Meta, but maybe Dye can bring some ethics to the design team over there. Who knows. Regardless, I am wishing him well rather than taking him down.