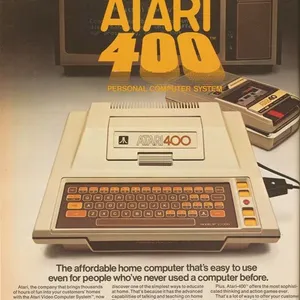

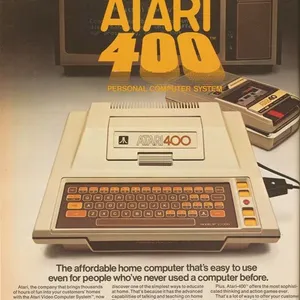

I rewatched the 1982 film TRON for the umpteenth time the other night with my wife. I have always credited this movie as the spark that got me interested in computers. Mind you, I was nine years old when this film came out. I was so excited after watching the movie that I got my father to buy us a home computer—the mighty Atari 400 (note sarcasm). I remember an educational game that came on cassette called “States & Capitals” that taught me, well, the states and their capitals. It also introduced me to BASIC, and after watching TRON, I wanted to write programs!

The Atari 400’s membrane keyboard was easy to wipe down, but terrible for typing. It also reminded me of fast food restaurant registers of the time.

Back in the early days of computing—the 1960s and ’70s—there was no distinction between users and programmers. Computer users wrote programs to do stuff for them. Hence the close relationship between the two that’s depicted in TRON. The programs in the digital world resemble their creators because they are extensions of them. Tron, the security program that Bruce Boxleitner’s character Alan Bradley wrote, looks like its creator. Clu looks like Kevin Flynn, played by Jeff Bridges. Early in the film, a compound interest program who was captured by the MCP’s goons says to a cellmate, “if I don’t have a User, then who wrote me?”

The programs in TRON looked like their users. Unless the user was the program, which was the case with Kevin Flynn (Jeff Bridges), third from left.

I was listening to a recent interview with Ivan Zhao, CEO and cofounder of Notion, in which he said he and his cofounder were “inspired by the early computing pioneers who in the ’60s and ’70s thought that computing should be more LEGO-like rather than like hard plastic.” Meaning computing should be malleable and configurable. He goes on to say, “That generation of thinkers and pioneers thought about computing kind of like reading and writing.” As in accessible and fundamental so all users can be programmers too.

The 1980s ushered in the personal computer era with the Apple IIe, Commodore 64, TRS-80 (maybe even the Atari 400 and 800), and then the Macintosh. Programs were beginning to be mass-produced and consumed by users, not programmed by them. To be sure, this move made computers much more approachable. But it also meant that users lost a bit of control. They had to wait for Microsoft to add the feature they wanted to Word.

Of course, we’re coming full circle. In 2025, with AI-enabled vibecoding, users are able to spin up little custom apps that do pretty much anything they want them to do. It’s easy, but not trivial. The only interface is the chatbox, so your control is only as good as your prompts and the model’s understanding. And things can go awry pretty quickly if you’re not careful.

What we’re missing is something accessible, but controllable. Something with enough power to allow users to build a lot, but not so much that it requires high technical proficiency to produce something good. In 1987, Apple released HyperCard and shipped it for free with every new Mac. HyperCard, as fans declared at the time, was “programming for the rest of us.”

HyperCard—Programming for the Rest of Us

HyperCard’s welcome screen showed some useful stacks to help the user get started.

Bill Atkinson was the programmer responsible for MacPaint. After the Mac launched, and apparently on an acid trip, Atkinson conceived of HyperCard. As he wrote on the Apple history site Folklore:

Inspired by a mind-expanding LSD journey in 1985, I designed the HyperCard authoring system that enabled non-programmers to make their own interactive media. HyperCard used a metaphor of stacks of cards containing graphics, text, buttons, and links that could take you to another card. The HyperTalk scripting language implemented by Dan Winkler was a gentle introduction to event-based programming.

There were five main concepts in HyperCard: cards, stacks, objects, HyperTalk, and hyperlinks.

- Cards were screens or pages. Remember that the Mac’s nine-inch monochrome screen was just 512 pixels by 342 pixels.

- Stacks were collections of cards, essentially apps.

- Objects were the UI and layout elements that included buttons, fields, and backgrounds.

- HyperTalk was the scripting language that read like plain English.

- Hyperlinks were links from one interactive element like a button to another card or stack.
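To make those concepts concrete, here is a minimal sketch of how they might be modeled today. The names (`recipeStack`, `linkTo`, `clickButton`) are my own illustration, not HyperCard's actual file format or API, and HyperTalk scripts are left out entirely.

```javascript
// Hypothetical model of HyperCard's concepts. Every name here is illustrative.
const recipeStack = {
  name: "Recipes", // a stack is a collection of cards, essentially an app
  cards: [
    {
      id: "home", // a card is one screen
      objects: [
        { type: "field", name: "Title", text: "My Recipes" },
        { type: "button", name: "Open Soup", linkTo: "soup" }, // a hyperlink
      ],
    },
    {
      id: "soup",
      objects: [
        { type: "field", name: "Title", text: "Tomato Soup" },
        { type: "button", name: "Back", linkTo: "home" },
      ],
    },
  ],
};

// Follow a hyperlink: find the named button on the current card
// and return the card it points to.
function clickButton(stack, currentCardId, buttonName) {
  const card = stack.cards.find((c) => c.id === currentCardId);
  if (!card) throw new Error(`No card with id "${currentCardId}"`);

  const button = card.objects.find(
    (o) => o.type === "button" && o.name === buttonName
  );
  if (!button) {
    throw new Error(`No button "${buttonName}" on card "${currentCardId}"`);
  }

  const target = stack.cards.find((c) => c.id === button.linkTo);
  if (!target) throw new Error(`Button links to missing card "${button.linkTo}"`);
  return target;
}
```

Calling `clickButton(recipeStack, "home", "Open Soup")` returns the card whose `id` is `"soup"`, which is all a hyperlink between cards really is.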

When I say that HyperTalk read like plain English, I mean it really did. AppleScript is a direct descendant, and JavaScript’s event handlers were influenced by it. Here’s a sample logic script:

    if the text of field "Password" is "open sesame" then
      go to card "Secret"
    else
      answer "Wrong password."
    end if

Armed with this kit of parts, users were able to use this programming “erector set” to build all sorts of banal or wonderful apps. From tracking vinyl records to issuing invoices to transporting gamers to massive immersive worlds, HyperCard could do it all. The first version of the classic puzzle adventure game Myst was created with HyperCard. It comprised six stacks and 1,355 cards. From Wikipedia:

The original HyperCard Macintosh version of Myst had each Age as a unique HyperCard stack. Navigation was handled by the internal button system and HyperTalk scripts, with image and QuickTime movie display passed off to various plugins; essentially, Myst functions as a series of separate multimedia slides linked together by commands.

The hit game Myst was built in HyperCard.

For a while, HyperCard was everywhere. Teachers made lesson plans. Hobbyists made games. Artists made interactive stories. In the Eighties and early Nineties, there was a vibrant shareware community of small independent developers who created and shared simple programs for a postcard, a beer, or five dollars. Thousands of HyperCard stacks were distributed on aggregated floppies and CD-ROMs. Steve Sande, writing in Rocket Yard:

At one point, there was a thriving cottage industry of commercial stack authors, and I was one of them. Heizer Software ran what was called the “Stack Exchange”, a place for stack authors to sell their wares. Like Apple with the current app stores, Heizer took a cut of each sale to run the store, but authors could make a pretty good living from the sale of popular stacks. The company sent out printed catalogs with descriptions and screenshots of each stack; you’d order through snail mail, then receive floppies (CDs at a later date) with the stack(s) on them.

Heizer Software’s “Stack Exchange,” a marketplace for HyperCard authors.

From Stacks to Shrink-Wrap

But even as tiny shareware programs and stacks thrived, the ground beneath this cottage industry was beginning to shift. The computer industry—to move from niche to one in every household—professionalized and commoditized software development, distribution, and sales. By the 1990s, the dominant model was packaged software that was merchandised on store shelves in slick shrink-wrapped boxes. The packaging was always oversized for the floppy or CD it contained to maximize visual space.

Unlike the users/programmers from the ’60s and ’70s, you didn’t make your own word processor anymore, you bought Microsoft Word. You didn’t build your own paint and retouching program—you purchased Adobe Photoshop. These applications were powerful, polished, and designed for thousands and eventually millions of users. But that meant if you wanted a new feature, you had to wait for the next upgrade cycle—typically a couple of years. If you had an idea, you were constrained by what the developers at Microsoft or Adobe decided was on the roadmap.

The ethos of tinkering gave way to the economics of scale. Software became something you consumed rather than created.

From Shrink-Wrap to SaaS

The 2000s took that shift even further. Instead of floppy disks or CD-ROMs, software moved into the cloud. Gmail replaced the personal mail client. Google Docs replaced the need for a copy of Word on every hard drive. Salesforce, Slack, and Figma turned business software into subscription services you didn’t own, but rented month-to-month.

SaaS has been a massive leap for collaboration and accessibility. Suddenly your documents, projects, and conversations lived everywhere. No more worrying about hard drive crashes or lost phones! But it pulled users even farther away from HyperCard’s spirit. The stack you made was yours; the SaaS you use lives on someone else’s servers. You can customize workflows, but you don’t own the software.

For what started out as a note-taking app, Notion has come a long way. With its kit of parts—pages, databases, tags, etc.—it’s highly configurable for tracking information. But you can’t make games with it. Nor can you really tell interactive stories (sure, you can link pages together). You also can’t distribute what you’ve created and share with the rest of the world. (Yes, you can create and sell Notion templates.)

No productivity software programs are malleable in the HyperCard sense.

[IMAGE: Director]

Of course, there are specialized tools for creativity. Unreal Engine and Unity are great for making games. Director and Flash continued the tradition started by HyperCard—at least in the interactive media space—before they were supplanted by more complex HTML5, CSS, and JavaScript. Objectively, these authoring environments are more complex than HyperCard ever was.

The Web’s HyperCard DNA

In a fun remembrance, Constantine Frantzeskos writes:

HyperCard’s core idea was linking cards and information graphically. This was true hypertext before HTML. It’s no surprise that the first web pioneers drew direct inspiration from HyperCard – in fact, HyperCard influenced the creation of HTTP and the Web itself. The idea of clicking a link to jump to another document? HyperCard had that in 1987 (albeit linking cards, not networked documents). The pointing finger cursor you see when hovering over a web link today? That was borrowed from HyperCard’s navigation cursor.

Ted Nelson coined the terms “hypertext” and “hyperlink” in the mid-1960s, envisioning a world where digital documents could be linked together in nonlinear “trails”—making information interwoven and easily navigable. Bill Atkinson’s HyperCard was the first mass-market program that popularized this idea, even influencing Tim Berners-Lee, the father of the World Wide Web. Berners-Lee’s invention was about linking documents together on a server and linking to other documents on other servers. A web of documents.

Early web browser from 1993, ViolaWWW, directly inspired by the concepts in HyperCard.

Pei-Yuan Wei, developer of one of the first web browsers called ViolaWWW, also drew direct inspiration from HyperCard. Matthew Lasar writing for Ars Technica:

“HyperCard was very compelling back then, you know graphically, this hyperlink thing,” Wei later recalled. “I got a HyperCard manual and looked at it and just basically took the concepts and implemented them in X-windows,” which is a visual component of UNIX. The resulting browser, Viola, included HyperCard-like components: bookmarks, a history feature, tables, graphics. And, like HyperCard, it could run programs.

And of course, with the built-in source code viewer, browsers brought on a new generation of tinkerers who’d look at HTML and make stuff by copying, tweaking, and experimenting.

The Missing Ingredient: Personal Software

Today, we have low-code and no-code tools like Bubble for making web apps, Framer for building websites, and Zapier for automations. The tools are still aimed at professionals though. Maybe with the exception of Zapier and IFTTT, they’ve expanded the number of people who can make software (including websites), but they’re not general purpose. These are all adjacent to what HyperCard was.

(Re)enter personal software.

In an essay titled “Personal software,” Lee Robinson wrote, “You wouldn’t search ‘best chrome extensions for note taking’. You would work with AI. In five minutes, you’d have something that works exactly how you want.”

Exploring the idea of “malleable software,” researchers at Ink & Switch wrote:

How can users tweak the existing tools they’ve installed, rather than just making new siloed applications? How can AI-generated tools compose with one another to build up larger workflows over shared data? And how can we let users take more direct, precise control over tweaking their software, without needing to resort to AI coding for even the tiniest change? None of these questions are addressed by products that generate a cloud-hosted application from a prompt.

Of course, AI prompt-to-code tools have been emerging this year, allowing anyone who can type to build web applications. However, if you study these tools more closely—Replit, Lovable, Base44, etc.—you’ll find that the audience is still technical people. Developers, product managers, and designers can understand what’s going on. But not everyday people.

These tools are still missing ingredients HyperCard had that allowed it to be in the general zeitgeist for a while, that enabled users to be programmers again.

They are:

- Direct manipulation

- Technical abstraction

- Local apps

Direct Manipulation

As I concluded in my exhaustive AI prompt-to-code tools roundup from April, “We need to be able to directly manipulate components by clicking and modifying shapes on the canvas or changing values in an inspector.” The latency of the roundtrip of prompting the model, waiting for it to think and then generate code, and then rebuild the app is much too long. If you don’t know how to code, every change takes minutes, so building something becomes tedious, not fun.

Tools need to be canvas-first, not chatbox-first. Imagine a kit of UI elements on the left that you can drag onto the canvas and then configure and style—not unlike WordPress page builders.

AI is there to do the work for you if you want, but you don’t need to use it.

My sketch of the layout of what a modern HyperCard successor could look like. A directly manipulatable canvas is in the center, object palette on the left, and AI chat panel on the right.

Technical Abstraction

For gen pop, I believe that these tools should hide away all the JavaScript, TypeScript, etc. The thing that the user is building should just work.

Additionally, there’s an argument to be made to bring back HyperTalk or something similar. Here is the same password logic I showed earlier, but in modern-day JavaScript:

    const password = document.getElementById("Password").value;

    if (password === "open sesame") {
      window.location.href = "secret.html";
    } else {
      alert("Wrong password.");
    }

No one is going to understand that, much less write something like it.

One could argue that the user doesn’t need to understand that code since the AI will write it. Sure, but code is also documentation. If a user is working on an immersive puzzle game, they need to know the algorithm for the solution.
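To show how small the gap between an English-like rule and running logic can be, here is a rough sketch of an interpreter for exactly one sentence shape. The rule syntax, `RULE_PATTERN`, and `runRule` are hypothetical and only loosely modeled on the HyperTalk sample above. This is not real HyperTalk, and a real language would need a proper parser.

```javascript
// Hypothetical one-line rule syntax, loosely modeled on the HyperTalk sample.
// It understands exactly one sentence shape:
//   if field "<name>" is "<value>" then go to card "<card>" else answer "<message>"
const RULE_PATTERN =
  /^if field "([^"]+)" is "([^"]+)" then go to card "([^"]+)" else answer "([^"]+)"$/;

// Evaluate a rule against the current field values and
// return a description of what the app should do next.
function runRule(ruleText, fields) {
  const match = RULE_PATTERN.exec(ruleText.trim());
  if (!match) throw new Error("Rule not understood");

  const [, fieldName, expected, cardName, message] = match;
  return fields[fieldName] === expected
    ? { action: "go", card: cardName }
    : { action: "answer", message };
}

const passwordRule =
  'if field "Password" is "open sesame" then go to card "Secret" else answer "Wrong password."';
```

With `{ Password: "open sesame" }` as the fields, `runRule(passwordRule, ...)` returns an instruction to go to the card named `Secret`. With any other value it returns the "Wrong password." answer. The rule itself stays readable by the person who owns the app.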

As a side note, I think flow charts or node-based workflows are great. Unreal Engine’s Blueprints visual scripting is fantastic. Again, AI should be there to assist.

Unreal Engine has a visual scripting interface called Blueprints, with node blocks connected by wires representing game logic.

Local Apps

HyperCard’s file format was the “stack,” and stacks could be compiled into applications that could be distributed without HyperCard. Today’s cloud-based AI coding tools can all publish a project to a unique URL for sharing. That’s great for prototyping and for personal use, but if you wanted to distribute it as shareware or donation-ware, you’d have to map it to a custom domain name, and purchasing one from a registrar and dealing with DNS records is not straightforward.

What if these web apps could be turned into a single exchangeable file format like “.stack” or some such? Furthermore, what if they could be wrapped into executable apps via Electron?
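As a thought experiment, a single-file format could be as simple as a versioned JSON envelope. Everything in this sketch is an assumption on my part: the `format` and `version` fields, the `exportStack` and `importStack` helpers, and the sample `flashCards` data. No such ".stack" standard exists today.

```javascript
// Hypothetical ".stack" envelope. Field names and version number are assumptions.
const STACK_FORMAT = "stack";
const STACK_VERSION = 1;

// Serialize a stack object into the text of a single shareable file.
function exportStack(stack) {
  return JSON.stringify(
    { format: STACK_FORMAT, version: STACK_VERSION, stack },
    null,
    2
  );
}

// Parse file text back into a stack, rejecting files this sketch can't read.
function importStack(fileText) {
  const parsed = JSON.parse(fileText);
  if (parsed.format !== STACK_FORMAT) {
    throw new Error("Not a .stack file");
  }
  if (parsed.version > STACK_VERSION) {
    throw new Error(`Unsupported .stack version ${parsed.version}`);
  }
  if (!parsed.stack || !Array.isArray(parsed.stack.cards)) {
    throw new Error("Stack has no cards");
  }
  return parsed.stack;
}

// Sample data: a tiny flash-card stack for one history test.
const flashCards = {
  name: "History Test",
  cards: [
    { id: "q1", front: "Year HyperCard was released?", back: "1987" },
    { id: "q2", front: "Who created HyperCard?", back: "Bill Atkinson" },
  ],
};
```

The text returned by `exportStack(flashCards)` is what would be saved to disk, emailed, or put on a third-party store, and `importStack` is what the receiving app would run before opening it. The version check is there so an older app fails loudly on a newer file instead of corrupting it.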

Rip, Mix, Burn

Lovable, v0, and others already have sharing and remixing built in. This ethos is great and builds on the philosophies of the hippie computer scientists. In addition to fostering a remix culture, I imagine a centralized store for these apps. Of course, those that are published as runtime apps can go through the official Apple and Google stores if they wish. Finally, nothing stops third-party stores, similar to the collections of stacks that used to be distributed on CD-ROMs.

AI as Collaborator, Not Interface

As mentioned, AI should not be the main UI for this. Instead, it’s a collaborator. It’s there if you want it. I imagine that it can help with scaffolding a project just by describing what you want to make. And as it’s shaping your app, it’s also explaining what it’s doing and why so that the user is learning and slowly becoming a programmer too.

Democratizing Programming

When my daughter was in middle school, she used a site called Quizlet to make flash cards to help her study for history tests. There were often user-generated sets of cards for certain subjects, but there were never sets specifically for her class, her teacher, that test. With this HyperCard of the future, she would be able to build something custom in minutes.

Likewise, a small business owner who runs an Etsy shop selling T-shirts can spin up something a little more complicated to analyze sales and compare against overall trends in the marketplace.

And that same Etsy shop owner could sell the little app they made to others wanting the same tool for their stores.

The Future Is Close

Tron talks to his user, Alan Bradley, via a communication beam.

In an interview with Garry Tan of Y Combinator in June, Michael Truell, the CEO of Anysphere, which is the company behind Cursor, said his company’s mission is to “replace coding with something that’s much better.” He acknowledged that coding today is really complicated:

Coding requires editing millions of lines of esoteric formal programming languages. It requires doing lots and lots of labor to actually make things show up on the screen that are kind of simple to describe.

Truell believes that in five to ten years, making software will boil down to “defining how you want the software to work and how you want the software to look.”

In my opinion, his timeline is a bit conservative, but maybe he means for professionals. I wonder if something simpler will come along sooner that will capture the imagination of the public, like ChatGPT has. Something that will encourage playing and tinkering like HyperCard did.

A third TRON film, TRON: Ares, is coming out soon. In a panel discussion in the 5,000-seat Hall H at San Diego Comic-Con earlier this summer, Steven Lisberger, the creator of the franchise, offered this warning about AI: “Let’s kick the technology around artistically before it kicks us around.” While he said it as a warning, I think it’s an opportunity as well.

AI opens up computer “programming” to a much larger swath of people—hell, everyone. As an industry, we should encourage tinkering by building such capabilities into our products. Not UIs on the fly, but mods as necessary. We should build platforms that increase the pool of users from technical people to everyday users like students, high school teachers, and grandmothers. We should imagine a world where software is as personalizable as a notebook—something you can write in, rearrange, and make your own. And maybe users can be programmers once again.